10 Technologies That Will Reshape SCM Software | Quarter 1 2018 Issue of Supply Chain Quarterly


By Kelly Thomas | Reprinted from the Quarter 1 2018 issue of Supply Chain Quarterly

Today's supply chain management software can't keep pace with the continuous change that's challenging business models almost across the board. Together, 10 key technologies could solve that problem and enable the cutting-edge capabilities companies will need in the future.

Supply chain management software is in need of an overhaul. Current SCM solutions, which are used for planning and managing assets, inventory, and people to deliver products and services to customers, are based on architectures that were created in the 1990s. These solutions have been enhanced and upgraded, but their underlying architectures remain the same. As a result, they are limited in their ability to rapidly address the significant and continuous change brought on by increasing customer expectations and new competition. In fact, enterprise SCM software adopts new ideas and technologies significantly more slowly than technology itself changes, particularly the technologies made available to consumers. In many cases, SCM software has contributed to the inertia that prevents companies from meeting continuously rising customer expectations economically.

At the same time, companies across all industries are grappling with how to leverage the explosion of new technologies to transform themselves into "digital enterprises." In a digital enterprise, physical assets are increasingly augmented or replaced by digital technologies and software. This makes assets intelligent, improving their capabilities and allowing them to communicate their status. As a result, enterprises are able to improve their operations and provide unique, tailored customer experiences.


[Figure 1] The traditional planning-execution funnel

[Figure 2] SCM software bingo board

[Figure 3] Improvement curve comparison

[Figure 4] Emerging SCM software architecture

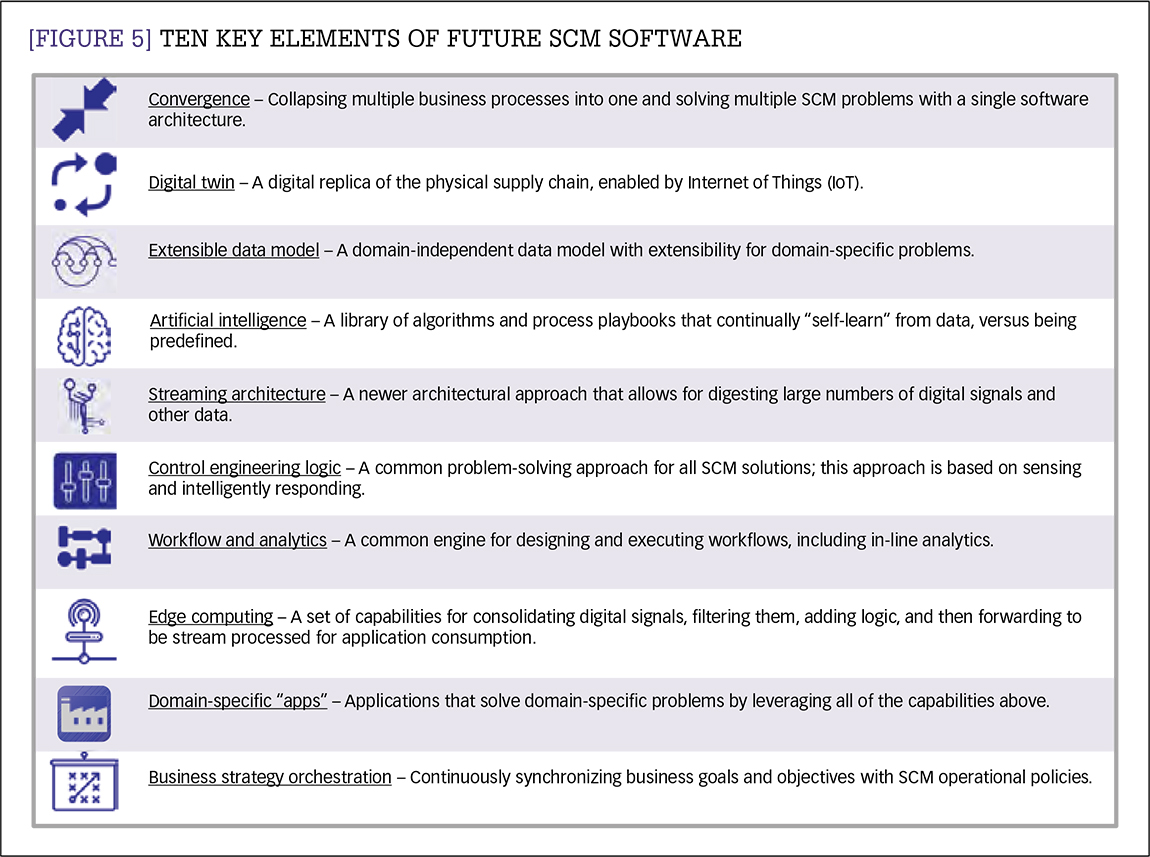

[Figure 5] Ten key elements of future SCM software

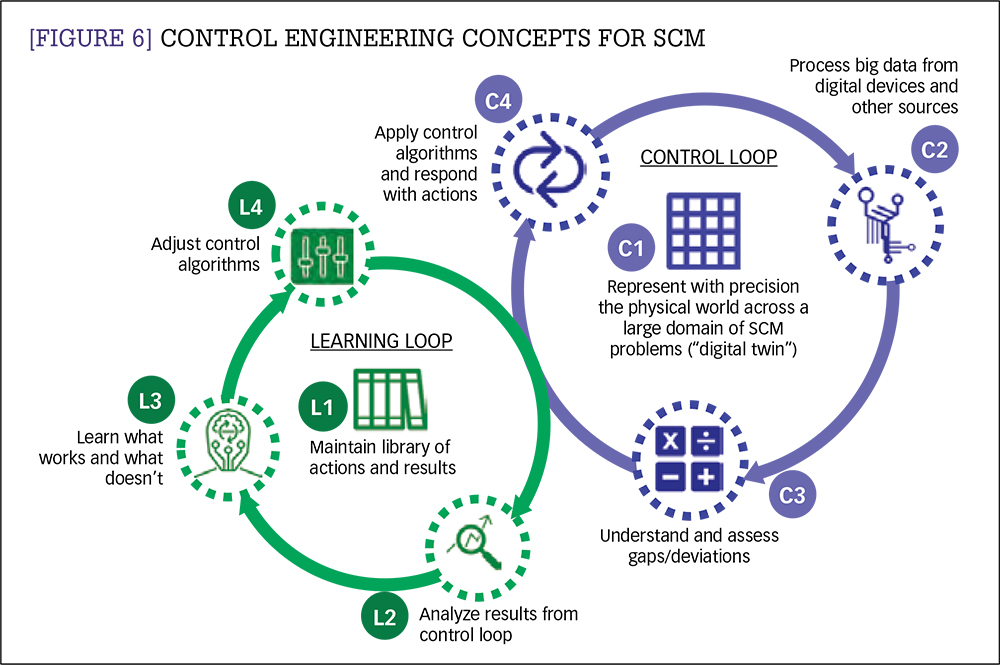

[Figure 6] Control engineering concepts for SCM

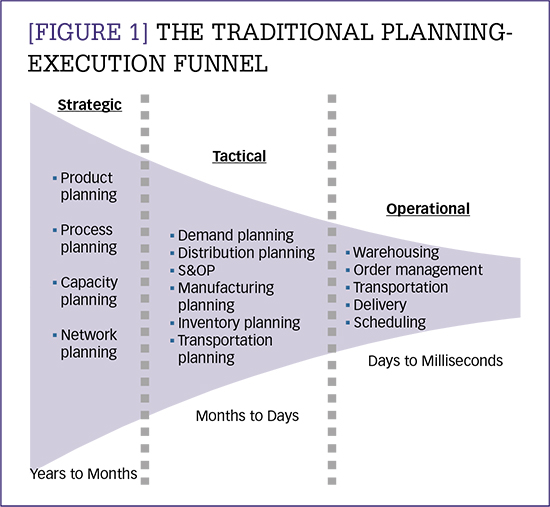

This situation does not mean that new solutions have not come to market; the venture capital market for SCM software is robust, with hundreds of millions of dollars deployed annually.1 Established players also continue to invest hundreds of millions of dollars every year to enhance and upgrade existing solutions. While this level of investment is both welcome and encouraging, it is not sufficient in and of itself to ensure that supply chain software will be able to meet the business needs of the future. Investments must be targeted toward bringing together technologies in a comprehensive way that addresses the core challenges preventing companies from transforming more quickly to new business models. With that in mind, this article first examines the current state of SCM software, and then describes 10 key technology elements that supply chain software must bring together to support cutting-edge supply chain management in the future.

What's the problem?

This dynamic has led to long-held beliefs that solutions for different time dimensions and different functions require fundamentally different architectures; this further reinforces organizational boundaries along the supply chain and is an impediment to breakthrough improvements. At the same time, companies need synchronization across time and function in order to compete effectively. For example, making the best economic decision about where to source inventory to satisfy an online order requires functional synchronization of order management, warehouse management, and transportation, as well as, in some cases, store operations. It further requires synchronization with upstream demand planning, manufacturing planning, and inventory deployment to ensure inventory is in the optimal location when the order comes in.

Companies attempt to achieve this synchronization by investing millions of dollars in integration code to stitch together disparate architectures. As much as 30-50 percent of supply chain transformation program budgets go toward integration, and integration is a common source of project cost overruns.2

That we have gotten into this situation is completely normal. Peter Senge of the Massachusetts Institute of Technology (MIT) described this dynamic in his systems work in the 1990s: "From a very early age we are taught to break apart problems, to fragment the world. This apparently makes complex problems more manageable, but we pay a hidden enormous price. We can no longer see the consequences of our actions; we lose our intrinsic connection to a larger whole. When we try to 'see the big picture,' we try to reassemble the fragments in our minds, to list and organize all the pieces. The task is futile ... after a while we give up trying to see the whole altogether."3

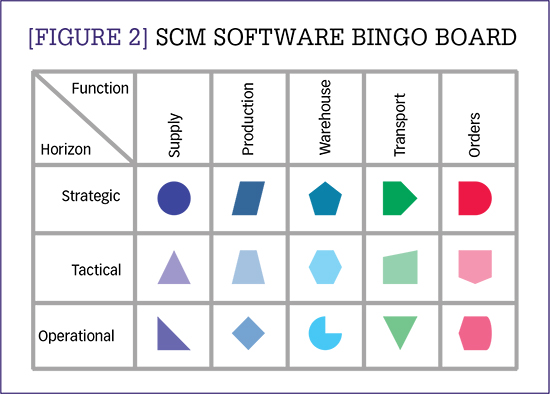

The net result of 40 years of SCM software development, including 20-plus years of commercial off-the-shelf software, is that the SCM software landscape in place in most companies is a hodgepodge of solutions that looks like a bingo game board. This is illustrated in Figure 2, where each shape in the grid represents a different solution with a different architecture. The consequences of this situation seep into all aspects of SCM software. The systems are difficult to change, do not easily adapt to changing business processes, learn only through manual intervention, and are difficult to synchronize cross-functionally back to enterprise business goals.

Future of SCM software: 10 key elements

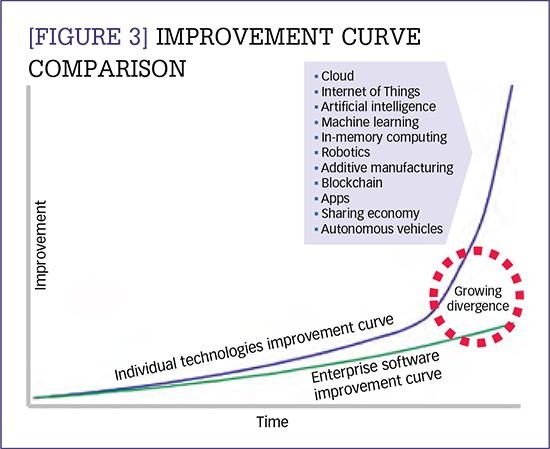

Meanwhile, over the past 10 years the world has witnessed a technology revolution. Technologies that have been around for years and had been following an incremental improvement curve have suddenly, simultaneously, and rapidly improved. This has happened to robotics, artificial intelligence, machine learning, 3-D printing, and digital intelligence, which is now found in just about every previously electromechanical device, including thermostats, motor vehicles, industrial controllers, toys, and consumer products.4 Digital intelligence is the foundation of the Internet of Things (IoT), in which physical objects have embedded, microprocessor-based intelligence and can communicate via the Internet.

As shown in Figure 3, a significant divergence has emerged between the pace of improvement in enterprise software and improvement in individual technologies; this is particularly true for technologies made available to consumers, whether in smartphones, "smart" homes, or in consumer products such as automobiles. By contrast, for various structural reasons, enterprise software, such as that used for production planning, warehouse management, and transportation management, has followed a much more linear improvement curve and has yet to experience the type of rapid improvement seen in individual technologies. "Bending the improvement curve upward" will require the adoption of a new architecture with new elements.

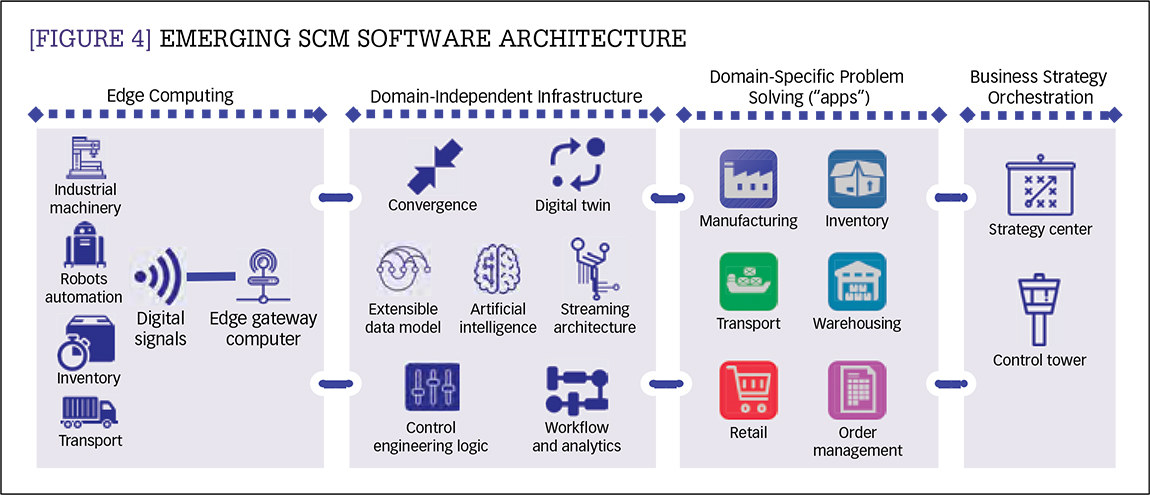

Elements of a new business and technical architecture for SCM software have been emerging over the course of the past five years. This emerging architecture, shown in Figure 4 and summarized in Figure 5, is based on business and technical concepts that are enumerated and described below. The architecture and its various elements offer great promise in addressing the issues previously discussed.

1. Convergence

The emerging business and technical architecture for SCM solutions is based on convergence of business processes and time. What does this mean? To draw an analogy, when Steve Jobs introduced the first iPhone in 2007, he started by saying he was introducing three devices: 1) a music player; 2) an Internet-connection device; and 3) a phone. (He could have added a fourth device: the camera.) And, he said, the three were incorporated into a single device based on a single architecture. This is known as convergence, and it immediately disrupted the individual markets for music players, Internet-connection devices, phones, and cameras. Likewise, the business architecture of tomorrow will see increasing process convergence and a collapsing of the time boundaries between planning and execution.

These concepts are not far-fetched; leading companies such as Procter & Gamble are already collapsing their demand, supply, sales and operations planning (S&OP), and channel management processes into a single process executed by a single team and supported by a single technology. This was first reported by the website Logistics Viewpoints in 2015.5 The days of having to buy separate software solutions for demand, supply, S&OP, and channel management are numbered. The same can be said across the different functional domains represented in Figure 2.

2. Digital twin

Supply chain management software operates by first creating a computer data model of the real world. Logic and algorithms are then run against the model to arrive at answers and decisions. These answers are then operationalized into the real world. The quality of answers or decisions generated by the software depends heavily on the quality of the data model, or how well the model represents reality at the point in time at which the answers are generated. This is true across the decision-making landscape of SCM—manufacturing, distribution, transportation, and warehousing.

In today's digital world, it has become common to refer to these data models as "digital twins." In other words, the data model needs to be an identical twin of the real world at all points in time. This can only happen when the model is very robust (that is, flexible enough to represent all real-world entities and scenarios) and when it can be brought up to date instantaneously. This second point is a core tenet of the digital enterprise and a key promise of the Internet of Things. Previously, there was a lag, or latency, between what was going on in the real world and what was represented by the model, so the software often generated suboptimal answers. Because supply chain resources, both things and people, can now transmit their status instantaneously, computer models will increasingly be synchronized with the real world, thereby enabling the digital twin.

In-memory computing (IMC) is one of the core enabling technologies behind the digital twin. IMC allows data models to be stored in memory, versus on a physical hard drive. This provides the speed necessary for enabling the digital twin. While IMC has been used for supply chain software for a couple of decades now, recent advances allow it to be scaled to handle much larger problems, including those that require the processing of a large number of digital signals from the Internet of Things.
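To make the digital-twin idea concrete, here is a minimal Python sketch, assuming a hypothetical `StatusEvent` message (resource ID, attribute, value, timestamp) sent by connected resources. A plain in-process dictionary stands in for the in-memory store; the sketch illustrates keeping a model synchronized with reported status, not the scaling that commercial IMC platforms provide.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class StatusEvent:
    """A status message from a supply chain resource (hypothetical schema)."""
    resource_id: str
    attribute: str
    value: float
    timestamp: float  # seconds since epoch


@dataclass
class DigitalTwin:
    """In-memory model holding the latest reported state of each resource."""
    state: Dict[str, Dict[str, float]] = field(default_factory=dict)
    last_seen: Dict[str, float] = field(default_factory=dict)

    def apply(self, event: StatusEvent) -> None:
        # Skip messages older than the newest one already applied for this
        # resource, so late-arriving data cannot roll the model back in time.
        if event.timestamp < self.last_seen.get(event.resource_id, float("-inf")):
            return
        self.state.setdefault(event.resource_id, {})[event.attribute] = event.value
        self.last_seen[event.resource_id] = event.timestamp

    def lag(self, resource_id: str, now: float) -> float:
        """Seconds between `now` and the last update: the model's latency."""
        return now - self.last_seen[resource_id]


twin = DigitalTwin()
twin.apply(StatusEvent("DC-01/SKU-123", "on_hand", 40.0, 100.0))
twin.apply(StatusEvent("DC-01/SKU-123", "on_hand", 35.0, 105.0))
twin.apply(StatusEvent("DC-01/SKU-123", "on_hand", 50.0, 90.0))  # stale, ignored
print(twin.state["DC-01/SKU-123"]["on_hand"], twin.lag("DC-01/SKU-123", 110.0))
# prints: 35.0 5.0
```

The `lag` value corresponds to the latency described above; the closer it stays to zero, the closer the model comes to being a true twin.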

3. Extensible data model

Future SCM software will have general-purpose data models with extensibility across functional domains, meaning the data model can represent manufacturing, distribution, and warehousing, for example. At the dawn of packaged SCM software in the 1990s, pioneers set this as a key objective. For a number of technical and business reasons, this objective was not achieved. Instead, SCM software evolved toward built-for-purpose, proprietary data models. For each new problem space in SCM, a new data model and new set of software was developed. This contributed to the "bingo board" problem described in Figure 2.

Supply chain software requires robust data models that can precisely represent myriad relationships and use cases across diverse environments. Precision has become more important as supply chains increasingly have to deliver products when, where, and how consumers desire them. The combination of the digital twin and an extensible data model provides the means to deliver much more precision when synchronizing operations across, for example, retail, distribution, and manufacturing.
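One way to picture a general-purpose, extensible data model is a small graph of generic nodes and relationships whose domain-specific details live in open attribute maps. The Python sketch below is illustrative only; the `Node`, `Lane`, and `SupplyChainModel` names and the example attributes are hypothetical, not drawn from any product.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Node:
    """A generic location; `kind` and `attrs` keep it domain-independent."""
    node_id: str
    kind: str  # e.g. "plant", "dc", "store" (illustrative values)
    attrs: Dict[str, Any] = field(default_factory=dict)


@dataclass
class Lane:
    """A directed relationship between two nodes, e.g. a supply or shipping flow."""
    source: str
    target: str
    kind: str
    attrs: Dict[str, Any] = field(default_factory=dict)


@dataclass
class SupplyChainModel:
    nodes: Dict[str, Node] = field(default_factory=dict)
    lanes: List[Lane] = field(default_factory=list)

    def add_node(self, node: Node) -> None:
        self.nodes[node.node_id] = node

    def add_lane(self, lane: Lane) -> None:
        # Relationships may only connect nodes already in the model.
        if lane.source not in self.nodes or lane.target not in self.nodes:
            raise KeyError(f"unknown endpoint in {lane.source} -> {lane.target}")
        self.lanes.append(lane)

    def downstream(self, node_id: str) -> List[str]:
        """Nodes fed directly by `node_id`, whatever their functional domain."""
        return [lane.target for lane in self.lanes if lane.source == node_id]


model = SupplyChainModel()
model.add_node(Node("PLANT-1", "plant", {"lines": 3}))             # manufacturing
model.add_node(Node("DC-1", "dc", {"dock_doors": 24}))             # distribution
model.add_node(Node("STORE-7", "store", {"backroom_sqft": 1200}))  # retail
model.add_lane(Lane("PLANT-1", "DC-1", "supplies", {"lead_time_days": 2}))
model.add_lane(Lane("DC-1", "STORE-7", "ships_to", {"lead_time_days": 1}))
# A new domain-specific attribute is added without changing the schema:
model.nodes["DC-1"].attrs["cold_storage"] = True
```

The design choice here, open attribute maps, trades schema-level validation for flexibility; a production model would still need rules for checking those attributes.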

4. Artificial intelligence

Future SCM software will be characterized by its ability to "self-learn." This means it will adapt and make decisions by itself, without human intervention. This will be made possible by artificial intelligence (AI) and, more specifically, machine learning, which is software that can learn from data rather than being completely driven by rules configured by humans. AI allows software to take on more of the decision-making load associated with managing supply chains. This is most prominent in fields such as robotics and self-driving trucks, where actions are self-directed based on the software's ability to learn. In SCM software, AI will start by providing suggestions to humans, and then eventually be used to automate decisions.

The state of the art of learning in SCM software today is manual trial and error. When new situations arise that the software does not cover, either the software is reconfigured or the code itself is modified to accommodate the new situation. Either way is expensive and time consuming. Those responses are also ineffective, given that supply chains and supply chain problems are highly dynamic and changing all the time. Machine learning will be critical to providing increasingly sophisticated response algorithms as part of the control engineering loop shown in Figure 6. For example, as part of today's S&OP process, software provides decision options that can be applied when demand and supply do not match. These options (change a price, run overtime, or increase supply, to name a few) are rigidly defined. By contrast, machine learning promises to learn and come up with new response options, including sophisticated multivariate options.
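The difference between rigidly defined options and learned behavior can be illustrated with a deliberately simple technique, an epsilon-greedy multi-armed bandit, which estimates from observed outcomes which response option tends to pay off best. This is a stand-in chosen for brevity, not the machine learning the article anticipates: it only ranks predefined options and cannot invent new or multivariate ones. The option names and simulated payoffs are hypothetical.

```python
import random
from typing import Dict, List


class ResponseLearner:
    """Epsilon-greedy bandit: learns the average payoff of each response option."""

    def __init__(self, options: List[str], epsilon: float = 0.1, seed: int = 0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts: Dict[str, int] = {o: 0 for o in options}
        self.values: Dict[str, float] = {o: 0.0 for o in options}

    def choose(self) -> str:
        # Occasionally explore a random option; otherwise exploit the best estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.values))
        return max(self.values, key=self.values.get)

    def update(self, option: str, payoff: float) -> None:
        # Incremental mean: avg += (payoff - avg) / n
        self.counts[option] += 1
        self.values[option] += (payoff - self.values[option]) / self.counts[option]


# Simulated environment (hypothetical): mean payoff of each option, plus noise.
TRUE_MEAN = {"change_price": 1.0, "run_overtime": 2.0, "increase_supply": 3.0}
env = random.Random(42)

learner = ResponseLearner(list(TRUE_MEAN))
for _ in range(2000):
    option = learner.choose()
    learner.update(option, env.gauss(TRUE_MEAN[option], 0.5))

best = max(learner.values, key=learner.values.get)
```

No rule says "prefer increasing supply"; the preference emerges from the observed payoffs and would shift if those payoffs changed.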

5. Streaming architecture

Streaming architecture has emerged in the past five years to help solve problems requiring real-time processing of large amounts of data. This architecture will be increasingly important to SCM software, as solutions need to enable the digital twin in order to support supply chains' precise synchronization across time and function. There are two major areas in streaming architecture: streaming and stream processing. Streaming is the ability to reliably send large numbers of messages (for example, digital signals from IoT devices), while stream processing is the ability to accept the data, apply logic to it, and derive insights from it. The digital twin discussed earlier is the processing part of the streaming architecture.
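The split between streaming and stream processing can be sketched in a few lines of Python. Here a generator stands in for the streaming layer (in production this role is played by a platform such as Kafka), and a processing function applies logic to each message as it arrives. The refrigerated-container scenario, message format, window size, and temperature limit are all hypothetical.

```python
from collections import deque
from typing import Deque, Dict, Iterable, Iterator, Tuple

Reading = Tuple[str, float]  # (sensor_id, temperature in degrees C), hypothetical


def sensor_stream(readings: Iterable[Reading]) -> Iterator[Reading]:
    """Streaming: deliver messages one at a time, in arrival order."""
    for reading in readings:
        yield reading


def process_stream(stream: Iterator[Reading], window: int = 3,
                   limit: float = 8.0) -> Iterator[str]:
    """Stream processing: keep a rolling window per sensor and emit an insight
    (an alert) whenever the full window's average exceeds the limit."""
    windows: Dict[str, Deque[float]] = {}
    for sensor_id, value in stream:
        w = windows.setdefault(sensor_id, deque(maxlen=window))
        w.append(value)
        avg = sum(w) / len(w)
        if len(w) == window and avg > limit:
            yield f"{sensor_id}: rolling avg {avg:.1f} exceeds {limit}"


readings = [("reefer-9", t) for t in (5.0, 7.0, 9.0, 11.0, 12.0)]
alerts = list(process_stream(sensor_stream(readings)))
```

Because both stages are lazy, each reading is processed as soon as it is produced rather than after the whole batch has been collected.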

6. Control engineering logic

Control engineering is an engineering discipline concerned with systems that process data about an environment and then apply algorithms to drive the environment toward a desired state. In manufacturing, for example, a control system continuously processes data about the state of machines, inbound materials, and progress against the order backlog, and then runs algorithms that direct the release of materials to achieve production goals. This is known as a control loop. The key concepts are shown in Figure 6: a "control" loop executes continuously, while a "learning" loop adjusts the control algorithms based on the results of each pass through the control loop.

This concept is embedded in just about every function related to supply chain management. S&OP, for example, has an objective function, a financial goal, and resources and people must be mobilized to achieve it. When there is a deviation (in control engineering this is known as the "error") between the objective function and what is actually happening, corrective action must be taken. This corrective action could be something like reducing prices, increasing inventory, or working overtime. In the future, these corrective actions will be increasingly aided by artificial intelligence. Whether it's in supply, manufacturing, distribution, warehousing, or order management, much of the work involved in SCM is focused on reducing the "error" between the objective and what is actually happening in the real world.
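The control and learning loops described above can be sketched as a toy production example. The control loop releases material to hit a target output using its current estimate of process yield and measures the error; the learning loop then refines the yield estimate after each pass. The yield figures and smoothing factor are hypothetical, and the real process is assumed to be noise-free to keep the sketch short.

```python
from typing import List


def run_control_loop(target: float, true_yield: float, periods: int,
                     est_yield: float = 1.0, alpha: float = 0.5) -> List[float]:
    """Return the error (target minus actual output) for each period."""
    errors = []
    for _ in range(periods):
        # Control loop: release enough material to reach the target,
        # given what the model currently believes the yield to be.
        release = target / est_yield
        output = release * true_yield   # what the real process delivers
        error = target - output         # deviation from the desired state
        errors.append(round(error, 2))
        # Learning loop: nudge the yield estimate toward what was observed.
        observed_yield = output / release
        est_yield += alpha * (observed_yield - est_yield)
    return errors


errors = run_control_loop(target=100.0, true_yield=0.8, periods=5)
# errors: [20.0, 11.11, 5.88, 3.03, 1.54]
```

The error roughly halves on each pass because the learning loop closes half of the remaining gap in the yield estimate per period (alpha = 0.5).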

7. Workflow and analytics

Workflow defines the steps a worker carries out to accomplish a particular activity, task, or unit of work. In software, this often means the sequence of screens, clicks, and other interactions a user executes. In most cases today, these steps are rigid and predefined; changing them requires configuration or even software code changes, which can take weeks or months.

Having a common, flexible workflow engine across SCM functional domains is critical to achieving convergence in supply chain software. This provides the ability to support many different use cases and interactions with the software, not just within functional domains but also across domains.
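A common, flexible workflow engine can be approximated by treating the sequence of steps as data rather than code, so that a process change means editing a definition instead of reprogramming. The Python sketch below assumes hypothetical step names that span order management, warehousing, and transportation; it is not modeled on any particular workflow product.

```python
import json
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]
STEPS: Dict[str, Callable[[Context], None]] = {}  # registry of reusable steps


def step(name: str):
    """Decorator that registers a function under a workflow step name."""
    def register(fn: Callable[[Context], None]) -> Callable[[Context], None]:
        STEPS[name] = fn
        return fn
    return register


@step("check_inventory")       # order management
def check_inventory(ctx: Context) -> None:
    ctx["available"] = ctx["on_hand"] >= ctx["qty"]


@step("allocate")              # warehouse management
def allocate(ctx: Context) -> None:
    if ctx["available"]:
        ctx["on_hand"] -= ctx["qty"]
        ctx["status"] = "allocated"
    else:
        ctx["status"] = "backordered"


@step("book_carrier")          # transportation
def book_carrier(ctx: Context) -> None:
    if ctx.get("status") == "allocated":
        ctx["carrier"] = "parcel"


def run_workflow(definition: List[str], ctx: Context) -> Context:
    """Run the named steps in order; the sequence itself is configuration."""
    for name in definition:
        STEPS[name](ctx)
    return ctx


# The definition could be loaded from a config file instead of being coded.
definition = json.loads('["check_inventory", "allocate", "book_carrier"]')
order = run_workflow(definition, {"on_hand": 10, "qty": 4})
```

Dropping or reordering a step, or adding one from another functional domain, changes only the JSON definition; the registered step functions stay the same.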

Integrated into each workflow are both logic and analytics. These analytics help predict things like demand, the impact of a promotion, or the precise arrival of an inbound ship, to name just a few possibilities. Analytics are now headed in the direction of prescribing answers to problems. For example, predicting demand is important, but it's equally important to know what to do when the prediction does not match the plan. This is where prescriptive analytics can help, by providing insights into what to do when reality does not match the operational plan. Artificial intelligence can also enable prescriptive analytics by continuously learning from past decisions.
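The distinction between predicting and prescribing can be shown with two small functions: one forecasts demand, and the other recommends an action when the forecast misses the plan. The moving-average forecast, the 5 percent tolerance, and the option names and per-unit costs are hypothetical placeholders chosen only to keep the sketch readable.

```python
from statistics import mean
from typing import Dict, List, Optional, Tuple

# Hypothetical response options: name -> (direction, cost per unit of gap).
# direction +1 closes a shortfall (demand above supply); -1 closes a surplus.
OPTIONS: Dict[str, Tuple[int, float]] = {
    "run_overtime": (1, 4.0),
    "expedite_supplier": (1, 6.5),
    "discount_price": (-1, 2.0),
}


def forecast(history: List[float], window: int = 3) -> float:
    """Predictive step: moving average of the most recent periods."""
    return mean(history[-window:])


def prescribe(predicted: float, planned_supply: float,
              options: Dict[str, Tuple[int, float]] = OPTIONS,
              tolerance: float = 0.05) -> Optional[Tuple[str, float]]:
    """Prescriptive step: if the prediction misses the plan by more than the
    tolerance, return the cheapest matching option and its estimated cost."""
    gap = predicted - planned_supply
    if abs(gap) <= tolerance * planned_supply:
        return None  # close enough to plan; no action needed
    needed = 1 if gap > 0 else -1
    candidates = {name: cost for name, (direction, cost) in options.items()
                  if direction == needed}
    if not candidates:
        return None
    best = min(candidates, key=candidates.get)
    return best, round(abs(gap) * candidates[best], 2)


demand = [100.0, 110.0, 130.0, 150.0]
action = prescribe(forecast(demand), planned_supply=110.0)
```

Here the choice among options is a fixed cost comparison; the learning from past decisions mentioned above would replace that fixed comparison with one that adapts over time.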

Analytics used to be an offline, after-the-fact activity to determine what had happened. In other words, it had its own workflow that was separate from operational workflows. While this is helpful for looking in the rearview mirror, it is limited in its ability to help with what is currently happening. Analytics that are built in-line to operational workflows provide a dynamic, up-to-the-minute view, versus the offline model, which might provide a week-old or even a month-old view.

8. Edge computing

Cloud computing, a centralized form of computing often accessed over the Internet, is now being augmented with localized computing, also known as "edge computing." (The term refers to being "out on the edge" of the cloud, close to where "smart" machinery is located.) Edge computing has rapidly evolved to address issues associated with processing data from the billions of microprocessor-equipped devices that are being connected to the Internet of Things.

The growth of edge computing is necessary for a number of reasons:

1. Network latency is a real concern. Latency refers to the turnaround time for sending a message and receiving a response. The turnaround time for sending and receiving information to and from the cloud may be 100-200 milliseconds. With localized, or edge, computing, the turnaround time may be 2-5 milliseconds. In real-time production or warehousing environments, that difference is critical. Furthermore, response times vary much more with cloud computing than with localized computing.

2. Machines, inventory, and connected "things" generate millions of digital signals per minute. Sending all of these to the cloud is impractical. Thus, edge computers play a critical role in determining what needs to be sent to the cloud, and what can be filtered or thrown out. For example, a machine might report on its capacity every second. If the reported information has not changed, or changed only within a small band, there may be no need to send it along to the cloud.

3. Some machines, inventory, and things operate in environments with no Internet connection or with Internet connections that are unstable. For example, ships at sea may not have Internet connections until they are close to port, while warehouses in emerging markets may have unreliable network connections. In situations like these, an edge computer can be used to process the signals locally; these signals are then forwarded to the cloud when a connection is available.
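Points 2 and 3 above (filtering out signals that changed only within a small band, and holding signals locally until a connection is available) can be sketched as a simple edge gateway. The deadband value, the message format, and the `send` callable standing in for the cloud uplink are assumptions for illustration.

```python
from collections import deque
from typing import Callable, Deque, Dict, Tuple

Signal = Tuple[str, float]  # (machine_id, reported capacity), hypothetical format


class EdgeGateway:
    """Filters signals locally and stores them while the uplink is down."""

    def __init__(self, deadband: float, send: Callable[[Signal], None]):
        self.deadband = deadband
        self.send = send                      # stand-in for the cloud uplink
        self.last_accepted: Dict[str, float] = {}
        self.buffer: Deque[Signal] = deque()
        self.online = True

    def ingest(self, signal: Signal) -> None:
        machine_id, value = signal
        previous = self.last_accepted.get(machine_id)
        # Deadband filter: drop readings within the band of the last accepted one.
        if previous is not None and abs(value - previous) < self.deadband:
            return
        self.last_accepted[machine_id] = value
        self.buffer.append(signal)
        self.flush()

    def flush(self) -> None:
        # Store-and-forward: drain the buffer only while connected.
        while self.online and self.buffer:
            self.send(self.buffer.popleft())


sent = []
gateway = EdgeGateway(deadband=2.0, send=sent.append)
for capacity in (100.0, 100.5, 101.0, 97.0):
    gateway.ingest(("press-4", capacity))   # 100.5 and 101.0 are filtered out
gateway.online = False
gateway.ingest(("press-4", 90.0))           # buffered: no connection
gateway.online = True
gateway.flush()                             # forwarded once reconnected
```

Comparing against the last accepted value, rather than the last raw reading, keeps slow drift from slipping through the filter one small step at a time.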

SCM technical architectures will increasingly be a mix of edge and cloud computing. For example, in Figure 4, the area labeled "Edge Computing" will be local to where the devices are located, and the rest of the diagram will be run in the cloud.

9. Domain-specific "apps"

While a certain set of capabilities can be abstracted into a domain-independent infrastructure (as shown in Figure 4), there is still a need for unique use cases in different domains such as manufacturing, transportation, and warehousing. Often, though, companies encounter new use cases that cannot be handled by existing software. Rather than engage in costly and time-consuming configuration or reprogramming, they increasingly are taking a cue from the consumer world, where an entire "app economy" has been built on common smartphone infrastructures. This type of thinking has started to find its way into enterprise software, and leading end-user companies, as well as packaged-software companies, are currently migrating their infrastructures to this type of structure.

For example, many companies want to "own" the data associated with their customers because the decisions they make based on that data are increasingly the battleground of competitive differentiation. These companies are creating environments where internal staff and external software providers can develop "value-add" apps that are useful in mining and making decisions against customer data. An example is an inventory-replenishment app that looks at customer data for a given category and augments inventory-deployment decisions with an algorithm that provides new insights based on a unique combination of weather, local events, holidays and chatter in social media.
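The app model can be sketched as a platform that exposes one extension point: each installed app receives the platform's base replenishment quantity plus external signals and returns an adjusted quantity. The interface, the two apps, and their rules (a 20 percent uplift above 30 degrees C, one extra unit per 100 event attendees) are hypothetical examples of the weather and local-event signals mentioned above, not real algorithms.

```python
from typing import Callable, Dict, List

Signals = Dict[str, float]
App = Callable[[float, Signals], float]  # (base quantity, signals) -> adjusted quantity


class ReplenishmentPlatform:
    """Common infrastructure that runs installed apps against its base decision."""

    def __init__(self) -> None:
        self.apps: List[App] = []

    def install(self, app: App) -> None:
        self.apps.append(app)

    def recommend(self, base_qty: float, signals: Signals) -> float:
        qty = base_qty
        for app in self.apps:        # apps run in installation order
            qty = app(qty, signals)
        return max(qty, 0.0)         # never recommend a negative order


def heat_wave_app(qty: float, signals: Signals) -> float:
    # Hypothetical rule: +20% when the forecast high exceeds 30 degrees C.
    return qty * 1.2 if signals.get("forecast_high_c", 0.0) > 30.0 else qty


def local_event_app(qty: float, signals: Signals) -> float:
    # Hypothetical rule: one extra unit per 100 expected event attendees.
    return qty + signals.get("event_attendance", 0.0) / 100.0


platform = ReplenishmentPlatform()
platform.install(heat_wave_app)
platform.install(local_event_app)
qty = platform.recommend(100.0, {"forecast_high_c": 33.0, "event_attendance": 5000.0})
```

Because apps only see the shared interface, internal staff or an outside provider could add a holiday or social-media app without touching the platform code.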

10. Business strategy orchestration

One of the persistent challenges in supply chain management is how to achieve continual synchronization between function-based operational areas and the overall business goals of the enterprise. This includes synchronization both within and across functional areas. For example, at the enterprise level, the goal could be high growth and low margin, low growth and high margin or all points in between. Furthermore, these goals may differ by business unit, product line and even by product and customer. These goals must be translated into operational policies, which then must be configured into SCM software.

As time goes on, SCM software will increasingly have an orchestration layer that creates and maintains alignment of the policies that govern each functional area. This will happen through two key constructs: the strategy dashboard, which maintains business goals and translates them into operational policies; and the control tower, which provides cross-functional visibility for the entire supply chain as well as control mechanisms to steer supply chain decisions. Over the past five years, control towers have captured the imagination of SCM professionals and C-level executives, with many companies attempting to create control room-type environments, even in boardrooms. That these constructs are called control towers or control rooms is further validation of the importance of the control engineering logic discussed earlier.
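The strategy-dashboard and control-tower roles can be illustrated with a small lookup: business goals are translated into functional policies, and a check flags any functional setting that has drifted from those policies. The goal categories, policy names, and numbers below are hypothetical, and only the two extreme goal combinations are shown rather than the full range in between.

```python
from typing import Dict, List, Tuple

Policy = Dict[str, Dict[str, float]]  # function -> setting -> value

# Strategy dashboard (hypothetical): (growth, margin) goal -> functional policies.
POLICY_TABLE: Dict[Tuple[str, str], Policy] = {
    ("high", "low"): {"inventory": {"service_level": 0.98},
                      "transportation": {"max_expedite_share": 0.15}},
    ("low", "high"): {"inventory": {"service_level": 0.92},
                      "transportation": {"max_expedite_share": 0.03}},
}


def policies_for(growth: str, margin: str) -> Policy:
    """Translate an enterprise goal into operational policies."""
    return POLICY_TABLE[(growth, margin)]


def check_alignment(growth: str, margin: str, configured: Policy) -> Dict[str, List[str]]:
    """Control-tower check: list settings, by function, that differ from policy."""
    drift: Dict[str, List[str]] = {}
    for function, settings in policies_for(growth, margin).items():
        for key, value in settings.items():
            if configured.get(function, {}).get(key) != value:
                drift.setdefault(function, []).append(key)
    return drift


# A business unit pursuing high growth/low margin, with inventory still
# configured for a high-margin strategy:
configured = {"inventory": {"service_level": 0.92},
              "transportation": {"max_expedite_share": 0.15}}
drift = check_alignment("high", "low", configured)
```

Since goals may differ by business unit, product line, product, or customer, a fuller version would key the table on those dimensions as well.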

Start now

Now is the time to make a step-function change away from the 20-year-old approaches that are still in use and continue to prevent companies from achieving breakthroughs. Many organizations are already starting down that path. Open-source technologies such as Kafka (streaming), Ignite (in-memory computing), Camunda (workflow), TensorFlow (machine learning/artificial intelligence), and Hadoop (analytics), to name a few, are lowering barriers to entry for everyone. As has been widely reported, leading end users such as Walmart are now taking advantage of these technologies to build their own solutions, tailored to their own operations and built around the concepts in this article. Software companies need to develop similar solutions and make them available for all to use.

Together, the 10 elements discussed in this article provide a preview of the future of supply chain management software. They represent a significant step toward resolving persistent function-and-time synchronization problems and should be helpful to supply chain professionals and solution providers that are looking to tackle these problems.

Notes:

1. Worldlocity analysis of venture capitalist and private equity investment in supply chain management software companies.

2. Worldlocity analysis of transformation programs over the past 20 years.

3. Peter M. Senge, The Fifth Discipline: The Art & Practice of the Learning Organization (New York: Doubleday, 2006).

4. Erik Brynjolfsson and Andrew McAfee, The Second Machine Age: Work, Progress, and Prosperity in a Time of Brilliant Technologies (New York: W.W. Norton & Company, 2014).

5. Steve Banker, "Procter & Gamble's Supply Chain Control Tower," Logistics Viewpoints, July 14, 2015, logisticsviewpoints.com/2015/07/14/procter-gambles-supply-chain-control-tower

Kelly Thomas is a supply chain management professional and CEO of Worldlocity, a research and advisory firm focusing on supply chain management software.